Criteria Refinement/OG

| Criteria Refinement | |
|---|---|
| Contributors | Joseph Bergin, Christian Kohls, Christian Köppe, Yishay Mor, Michel Portier, Till Schümmer, Steven Warburton |
| Last modification | May 17, 2017 |
| Source | Bergin et al. (2015)[1]; Warburton et al. (2016)[2][3] |
| Pattern formats | OPR Alexandrian |
| Usability | |
| Learning domain | |
| Stakeholders | |

Also Known As: Criteria Details

Refine assessment criteria to a more detailed level.

Context

You already have an Assessment Criteria List with minimum requirements and maybe some Hidden Criteria.

Problem

High-level criteria are needed as goals but may be too abstract to state clearly what you expect from your students.

Forces

Students are overwhelmed if you communicate all the details and dimensions of the criteria. To check whether high-level criteria are fulfilled, you need a more detailed list that tells you what to look for. A clear list of criteria tells you (and your students) what is expected. It does not, however, specify how each criterion is measured. If students do not perform well, you should be able to provide feedback and explain in detail what led to a poor grade.

Solution

Therefore, refine each criterion into sub-dimensions. State which outcomes you consider to be very good or very poor in each dimension.

Think about how to score this (points, or levels such as ++, +, 0, -, --).

To measure the actual performance, you should have a finer differentiation of criteria for yourself. Aggregate the individual performance grades into one overall grade. Not all detailed criteria necessarily have to be taken into account. They just provide hints about what you should look for. It is also a good idea to express what you consider very good and what you consider very weak. A Performance Sheet can help you enter the details for each criterion quickly and consistently. If students ask what a specific criterion means, you can explain and exemplify the high-level criterion using the details.
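As a rough illustration of the scoring and aggregation described above, the following Python sketch converts level symbols into points and averages them, first per criterion and then overall. The numeric mapping, the unweighted averaging, the function names, and the sample sheet are all assumptions made for this example; the pattern itself does not prescribe any of them.

```python
from statistics import mean

# Assumed mapping from level symbols to points (illustrative only).
LEVEL_POINTS = {"++": 2, "+": 1, "0": 0, "-": -1, "--": -2}


def criterion_score(ratings):
    """Average the rated sub-dimensions of one criterion.

    `ratings` maps a sub-dimension name to a level symbol, or to None
    when that detail was not taken into account.
    """
    points = [LEVEL_POINTS[level] for level in ratings.values() if level is not None]
    return mean(points) if points else None


def overall_score(sheet):
    """Average the criterion scores, skipping criteria without any rating."""
    scores = [criterion_score(ratings) for ratings in sheet.values()]
    rated = [score for score in scores if score is not None]
    return mean(rated) if rated else None


# Hypothetical performance sheet for one student.
sheet = {
    "requirements": {"completeness": "++", "explanation": "+", "reasoning": None},
    "architecture": {"completeness": "0", "correctness": "-"},
}

print(criterion_score(sheet["requirements"]))  # 1.5
print(overall_score(sheet))                    # 0.5
```

Leaving a sub-dimension as `None` reflects the point that not every detail has to count toward the grade. A weighted average or a lookup table from scores to grades would be equally plausible choices.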

Positive Consequences

Having detailed ratings for each of the criteria makes it easier to justify your grading. They can also be the basis for feedback to the students. Details are good anchors and reminders of what you should assess. Having several details may open alternative ways to fulfill the high-level criteria and thereby account for Diversity.

Negative Consequences

If there are too many details, the assessment becomes too complex. If the details are not communicated to students, they are treated unfairly.

Example

If an assignment requires the completion of several parts (e.g. researching the topic, writing down requirements, defining a system architecture, and writing pseudocode), then each of the assigned tasks could be rated as:

- fully completed vs missing

- exhaustive vs minimal

- correct vs missing the goal

- well explained vs blurry

- good reasoning vs out of the blue

- standard implementation vs innovative ideas

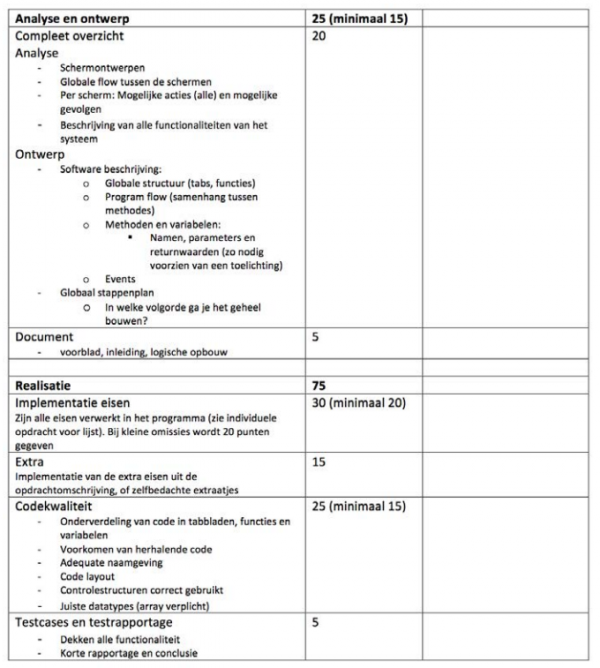

At HAN University of Applied Sciences, there is a practical assignment at the end of the introductory programming course. For the assessment of this assignment, a list of refined criteria is used. These refined criteria are given in the embedded list per main criterion as shown in the example (in Dutch).

Related patterns

Instead of prescribing criteria, let Students Define Criteria or let Students Guess Criteria. Instead of a fixed set of criteria, one can have Flexible Criteria and allow Multiple Paths (allowing different achievements to reach the goal). In team work, a Performance Matrix can make explicit who was responsible for what. This pattern is an instance of Open Instruments of Assessment and can be used within Constructive Alignment.

References

- [1] Pattern published in Bergin, J., Kohls, C., Köppe, C., Mor, Y., Portier, M., Schümmer, T., & Warburton, S. (2015). Assessment-driven course design foundational patterns. In Proceedings of the 20th European Conference on Pattern Languages of Programs (EuroPLoP 2015) (p. 31). New York: ACM.

- [2] Patlet published in Warburton, S., Mor, Y., Kohls, C., Köppe, C., & Bergin, J. (2016). Assessment driven course design: a pattern validation workshop. Presented at 8th Biennial Conference of EARLI SIG 1: Assessment & Evaluation. Munich, Germany.

- [3] Patlet also published in Warburton, S., Bergin, J., Kohls, C., Köppe, C., & Mor, Y. (2016). Dialogical Assessment Patterns for Learning from Others. In Proceedings of the 10th Travelling Conference on Pattern Languages of Programs (VikingPLoP 2016). New York: ACM.