E-Geo-Assessment/OG

| E-Geo-Assessment | |
|---|---|
| Contributors | Patricia Santos, Davinia Hernández-Leo, Toni Navarrete, Josep Blat |
| Last modification | May 15, 2017 |
| Source | Santos, Hernández-Leo, Navarrete & Blat (2014)[1] |
| Pattern formats | OPR Alexandrian |
| Usability | |
| Learning domain | |
| Stakeholders | |

Combining an educational technology standard for assessment with an open web map service (WMS) enables the creation of new types of questions that enhance the assessment of geographical skills. Multimedia maps allow for the inclusion of questions that are not possible with paper-based tests. By answering such questions and interacting with digital geography data, students can develop important educational skills.

Problem

Geographical data has a significant role not only in geography, but also in other educational areas such as history, biology, geology and sociology. The problem presented here arises especially in primary and secondary education. Teachers often use paper-based tests with blank maps to assess skills related to geographical information, but this type of test is no longer sufficient to assess all the skills required by an educational curriculum.

The geographical skills that a student needs to acquire have expanded as a consequence of the popularization of web map applications (WMA), which offer functions for interacting with enriched multimedia geographical data. For the teacher, however, using a WMA for assessment purposes is problematic because WMAs have not been designed with educational goals in mind. The problem this pattern addresses is therefore how to assess the kinds of geographical skills that students use when working with a WMA.

Context

The American National Research Council[2] supports the incorporation of Geographic Information Science across the K-12 curriculum. Geographical skills are present in the K-12 curriculum of most countries. For instance, the Spanish secondary educational curriculum indicates that students should be able to ‘identify, localize and analyse geographical elements at different cartographic scales’ and to ‘search, select, understand and relate ... geographical information from different sources: books, media, information technologies’[3]. Students should also be able to understand processes and how humans influence the environment. Teachers need to assess these skills, and this is most appropriately done through the use of technology.

Solution

Combine a WMA, e.g. Google Maps, Yahoo! Maps, OpenStreetMap, with the educational technology standard IMS Question & Test Interoperability (QTI).

The WMA has to provide an open Application Programming Interface (API). Google Maps[4] offers free access to its API so that programmers can build their own applications on it, so we use it as the example WMA for this pattern.

Using an educational technology standard for assessment matters because of its interoperability benefits. Educational technology standards support the deployment of compliant resources in different learning environments and facilitate communication with other specifications or services[5]. QTI is the de facto standard for developing Computer Assisted Assessment solutions (formative and summative) based on tests[6].

This combination enables us to benefit from the open aspects of an assessment standard and web map services (WMS), enabling interoperability with other learning services and standards, and the integration of large amounts of up-to-date geoinformation provided by spatial data infrastructures. The result of this combination is the extension of the assessment standard with a set of new types of questions using the multimedia content and functionalities of the WMA.

The educational technology standard for assessment defines the main elements of the assessment activity: the structure of the test, the questions, the score, the feedback, and the results. Those elements have an eXtensible Markup Language (XML) binding so that they can be interpreted by a software engine that renders the test. When the student answers a question, the engine evaluates the answer and returns an outcome. An assessment standard defines a set of elements that can be extended using other services (in this case, the functionalities of the WMA). Taking the set of questions defined in the assessment standard and the interactions that the WMA offers, a programmer can create new types of questions. These questions have to be interpreted by an engine compliant with both the assessment standard and the WMA service (see an example of implementation in the Verification section below).

The result of combining the WMA and the assessment standard is a set of questions with interactive maps. The student has to answer the questions by interacting with the maps (for instance: zooming in or out, dragging geographical elements, drawing over the map or interacting with specific geographical information). Teachers can use this solution to create e-questionnaires which can help students to acquire the skills described in the context section above. The sorts of new questions that are made possible are:

– Choice question: This is a form of multiple choice question using a multimedia map as an image for the question. The difference from a classical multiple choice question is the use of the configurable parameters that a WMA offers. Examples:

- Zoom: Users can change the zoom level of a map to contextualize the question.

- Drag: Users can drag the map (using four possible directions: down, up, right and left).

- Coordinates: The teacher can choose the latitude, longitude and maximum level of zoom of a map.

- Appearance: The teacher can choose the layer of the map: satellite, road map (or a combination of both) or 3D.

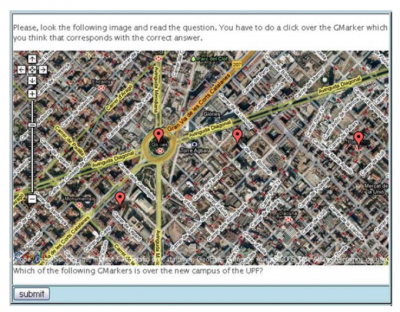

- Example: A satellite image (without text information over the map) of the island of Taiwan. The Drag functionality is activated (this means that the user can move the map which is visualized in a window). The question is: ‘What is the name of this island?’ and the four possible choices are: A. Honshu (Japan), B. Gran Canaria (Spain), C. Taiwan, D. Crete (Greece). By default the students see only the image of the island but they can use the drag functionality to explore the map in order to identify, localize and analyze other elements which characterize the zone. This type of question can also be formed using markers as choices (see an example in Figure 1).
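The configurable parameters listed above can be pictured as a small data structure. The following Python sketch is only an illustration under stated assumptions: the class names `MapView` and `MapChoiceQuestion`, their fields, and the approximate coordinates for Taiwan are hypothetical and are not part of QTI or of any web map API.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class MapView:
    """Hypothetical container for the map parameters a teacher configures."""
    latitude: float           # centre of the map
    longitude: float
    zoom: int                 # initial zoom level
    max_zoom: int             # maximum zoom level the student may reach
    layer: str = "satellite"  # e.g. "satellite", "roadmap" or "hybrid"
    allow_zoom: bool = False  # Zoom functionality switched on?
    allow_drag: bool = False  # Drag functionality switched on?


@dataclass
class MapChoiceQuestion:
    """Hypothetical multiple choice question that uses a map as its image."""
    prompt: str
    view: MapView
    choices: Dict[str, str]   # choice identifier -> label shown to the student
    correct: str              # identifier of the correct choice

    def score(self, selected: str) -> float:
        """Return 1.0 for the correct choice and 0.0 otherwise."""
        return 1.0 if selected == self.correct else 0.0


# The Taiwan example, with approximate (illustrative) centre coordinates.
taiwan = MapChoiceQuestion(
    prompt="What is the name of this island?",
    view=MapView(latitude=23.7, longitude=121.0, zoom=7, max_zoom=10,
                 allow_drag=True),
    choices={
        "A": "Honshu (Japan)",
        "B": "Gran Canaria (Spain)",
        "C": "Taiwan",
        "D": "Crete (Greece)",
    },
    correct="C",
)

print(taiwan.score("C"))
print(taiwan.score("A"))
```

In a real deployment these values would live in the XML of the question and be read by the engine; the sketch only shows which pieces of information such a question needs to carry.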

– Order interaction: The user has to select some elements of the question following a specific sequence. In this case, the user has to click in the correct order on the markers situated over a map.

– Examples:

- A world map with the zoom functionality enabled has six markers over different regions of the world. The question text is: ‘Order (from biggest to smallest) the regions with the greatest number of people without access to potable water.’

- The student can use the zoom to analyze the image in different scales, viewing the image of the entire world or a specific continent. He/she has to understand and relate the basic characteristics of the geographical diversity to be able to order the markers correctly.
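Scoring an order interaction comes down to comparing the sequence of clicked markers with the expected sequence. The sketch below assumes all-or-nothing scoring and uses made-up marker identifiers (`m1` to `m6`); the source does not state how the engine scores partially correct orderings.

```python
from typing import Sequence


def score_order(clicked: Sequence[str], expected: Sequence[str]) -> float:
    """Return 1.0 only if the markers were clicked in exactly the expected order.

    Assumption: all-or-nothing scoring; partial credit is not modelled here.
    """
    return 1.0 if list(clicked) == list(expected) else 0.0


# Hypothetical marker identifiers for a six-marker question.
expected_order = ["m3", "m1", "m6", "m2", "m5", "m4"]

print(score_order(["m3", "m1", "m6", "m2", "m5", "m4"], expected_order))
print(score_order(["m1", "m3", "m6", "m2", "m5", "m4"], expected_order))
```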

– Point within a polygon: An invisible polygon has been set up to mark a region of the map. The user answers the question by dragging a marker over a region of the map. An algorithm verifies the correct position.

- Example: The question is ‘Where is Bangladesh?’ The map contains a satellite image of the entire world. The student has to use the drag and the zoom functionality to localize and identify where Bangladesh is. If the student does not understand the different cartographic scales of a map, he/she cannot identify the correct position and so cannot answer the question.
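The source does not say which algorithm verifies the marker position. One common choice is the ray-casting (even-odd) test, sketched below in Python. The sketch treats longitude and latitude as plane coordinates, which is an assumption that only holds for regions that do not cross the antimeridian or the poles, and the rectangle used for Bangladesh is a rough illustrative box, not a real border.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (longitude, latitude), treated as plane coordinates


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count how many polygon edges a ray going right crosses."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            # x-coordinate at which the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing toggles inside/outside
    return inside


# Rough illustrative bounding box around Bangladesh (not an accurate border).
bangladesh_box = [(88.0, 20.5), (92.7, 20.5), (92.7, 26.7), (88.0, 26.7)]

print(point_in_polygon((90.4, 23.8), bangladesh_box))  # marker dropped near Dhaka
print(point_in_polygon((0.0, 0.0), bangladesh_box))    # marker dropped far away
```

A question author would typically define a polygon that follows the country outline more closely; the test itself stays the same.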

– Draw lines: The user has to draw a line (or lines) over a map as the answer to a question. As in the ‘Point within a polygon’ interaction, a polygon (invisible to the users) has been defined in the creation of the question. An algorithm determines if the line drawn by the student is within the polygon defined in the question.

- Example: The text of the question is: ‘Draw an approximate frontier between Portugal and Spain’. The image shows a satellite map of the Iberian Peninsula with the drag functionality allowed. It is necessary that the student understands the territory and uses the drag functionality to better contextualize the image. The student has to draw the frontier by clicking with the mouse within the image.
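A drawn line can be checked in a similar way. The sketch below makes a simplifying assumption: the line is stored as the list of points the student clicked, and it is accepted when every clicked point lies inside the hidden polygon. Segments between clicks are not checked, so this is an approximation rather than the engine's actual algorithm. The ray-casting helper is included so the block is self-contained, and the corridor coordinates are invented for illustration and do not trace the real border.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (longitude, latitude), treated as plane coordinates


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting (even-odd) point-in-polygon test."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def line_within_polygon(line: List[Point], polygon: List[Point]) -> bool:
    """Accept the answer if the line is non-empty and all its vertices are inside.

    Simplification: only the clicked vertices are tested, not the segments
    joining them.
    """
    return bool(line) and all(point_in_polygon(p, polygon) for p in line)


# Invented rectangular corridor standing in for the hidden polygon.
corridor = [(-7.6, 37.0), (-6.1, 37.0), (-6.1, 42.2), (-7.6, 42.2)]

good_line = [(-7.4, 37.2), (-7.0, 39.5), (-6.5, 41.8)]
bad_line = [(-7.4, 37.2), (-3.7, 40.4), (-6.5, 41.8)]  # middle click strays outside

print(line_within_polygon(good_line, corridor))
print(line_within_polygon(bad_line, corridor))
```

The width of the hidden polygon controls how approximate the student's line may be, which fits the wording ‘approximate frontier’ in the example question.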

In all of these types of questions students have to relate the text and the answer of the question with the digital information shown on the map. Sometimes it is necessary to use the zoom or the drag functions in order to answer the question.

Support

Source

The source for the pattern E-Geo-Assessment is the design narrative ‘Assessment of geographical skills using interactive maps in an e-questionnaire’.[7]

Supporting Cases

An exhibition at the Pompeu Fabra University (UPF, Barcelona) was set up with the objective of informing the students about the three campuses of the UPF. Students coming from different high schools interacted with the designed e-questionnaires which had interactive maps. These e-questionnaires helped students to explore the environment of the different campuses of the UPF. The participants answered questions which contained different types of maps containing information about the university. For instance the users had to localize the new campus of the UPF on a map (see Figure 1), and they had to place the three campuses of the UPF in order of the number of students in them.

This is an example of how questions with geographical interactions can be useful in informal learning, as well as in the more formal learning example described in the associated design narrative. By exploring the maps and answering the questions in these e-questionnaires, students implicitly learned about the environment of the university, and so the objective of the UPF organizers was achieved.

Theoretical justification

Computer Assisted Assessment (CAA) is the use of computers for assessing students’ learning[5]. Information and Communication Technologies (ICT) enable the automation of assessment tasks such as marking the students’ answers, providing feedback, designing e-questionnaires, establishing a bank of items, and creating new types of questions.

In an assessment activity based on paper and pencil tests some valued skills are not easily tested by simple item formats, and as a consequence these tests do not represent the curriculum adequately[8]. New forms of answering questions are needed in order to collect new types of students’ outputs[9].

Paper-based tests are not suited to the assessment of some student skills, for instance those related to IT[10]. Unfortunately, the majority of e-assessment tools translate the questions used in paper-based questionnaires directly into e-questionnaires, thus missing the potential of ICT to support new forms of testing[11]. The proposed pattern takes advantage of one such new form: questions whose input is based on spatial location.

Verification

The use of this pattern has been evaluated in a real education scenario (see the design narrative ‘Assessment of geographical skills using interactive maps in an e-questionnaire’[7]) in order to analyse the learning benefits and the attitude of students towards interacting with QTI-Google Maps tests. The teacher was interviewed and the students answered a pre-test and a post-test questionnaire (before and after interacting with the QTI-Google Maps test). Additionally, three researchers made observations during the study.

The teacher found that this type of test helped him to assess the geographical skills of his students[12]. Because the questions were assessed automatically, the teacher had more time to review the marks and special cases. Another benefit was that teachers could re-use and re-edit the questions. The students had a positive attitude to interacting with these tests. They were very familiar with WMA features, and the teacher was very surprised at how much the students knew about these tools. The students found educational benefits in the experience. In particular, they indicated that interacting with the QTI-Google Maps test helped them to remember the concepts better, and they thought that WMAs provided enriched and up-to-date information that was very useful for learning geographical concepts and for practicing geographical skills.

References

- [1] Santos, P., Hernández-Leo, D., Navarrete, T. & Blat, J. (2014). Pattern: Blended Evaluation. In Mor, Y., Mellar, H., Warburton, S., & Winters, N. (Eds.). Practical design patterns for teaching and learning with technology (pp. 305-310). Rotterdam, The Netherlands: Sense Publishers.

- [2] National Research Council. (2006). Learning to think spatially: GIS as a support system in the K-12 curriculum. Washington, DC: The National Academies Press.

- [3] Spanish Government. (2006). Boletín Oficial del Estado núm. 5 Viernes 5 enero 2007 Royal Decree 1631/2006. Department of Education and Science (In Spanish). Retrieved July 5, 2010, from http://www.boe.es/boe/dias/2007/01/05/pdfs/A00677-00773.pdf.

- [4] Google Maps. (2010). Google Maps API. Retrieved May 31, 2010, from http://code.google.com/intl/en/apis/maps/index.html

- [5] Bull, J., & McKenna, C. (2004). Blueprint for computer-assisted assessment. London: RoutledgeFalmer.

- [6] Missing reference: QTI (2006).

- [7] Santos, P., Hernández-Leo, D., Navarrete, T. & Blat, J. (2014). Design Narrative: Assessment of Geographical Skills Using Interactive Maps in an E-Questionnaire. In Mor, Y., Mellar, H., Warburton, S., & Winters, N. (Eds.). Practical design patterns for teaching and learning with technology (pp. 255-261). Rotterdam, The Netherlands: Sense Publishers.

- [8] Bennett, R. E. (1993). Towards intelligent assessment: An integration of constructed-response testing, artificial intelligence and model-based measurement. In N. Frederiksen, R. J. Mislevy, & I. I. Bejar (Eds.). Test theory for a new generation of tests. Hillsdale, NJ: Lawrence Erlbaum Associates.

- [9] Cizek, G. (1997). Learning, achievement, and assessment: Constructs at a crossroads. In G. D. Phye (Ed.). Handbook of classroom assessment: Learning, achievement, and adjustment. San Diego: Academic Press.

- [10] Ridgway, J., & McCusker, S. (2003). Using computers to assess new educational goals. Assessment in Education: Principles, Policy and Practice, 10(3), 309–328.

- [11] Conole, G., & Warburton, B. (2005). A review of computer-assisted assessment. ALT-J, 13(1), 17–31.

- [12] Navarrete, T., Santos, P., Hernández-Leo, D., & Blat, J. (2011). QTIMaps: A model to enable web maps in assessment. Educational Technology & Society Journal, 14(3), 203–217.