Try Once, Refine Once/OG

| Try Once, Refine Once | |
|---|---|
| Contributors | Aliy Fowler |
| Last modification | May 17, 2017 |
| Source | Fowler (2014)[1] |
| Pattern formats | OPR Alexandrian |
| Usability | |
| Learning domain | |
| Stakeholders | |

A two-step question-answering, exercise or assessment system that encourages students to think carefully about their initial answers to skills-based problems and then, once they receive feedback on their errors, to give just as much thought to refining those answers before submitting an improved version.

Problem

This pattern is particularly relevant to the formative assessment of skills-based courses. A typical approach in this context is to set students exercises that let them practise the requisite skills, and then to give feedback on their errors. However, students often pay far less attention to feedback than tutors would like. A significant delay in providing feedback makes matters worse. Even when there is little or no delay, students are often more interested in the mark they receive than in learning where they went wrong and what to do about it. If students are not encouraged to consider their errors properly, or to correct their faulty mental models in a timely fashion, these erroneous assumptions can become fossilised.

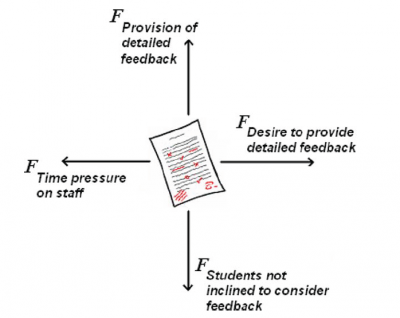

Forces

The Try Once, Refine Once pattern is an attempt to give students an effective incentive to correct their work (and their faulty mental models) before error fossilisation occurs. In this assessment mode, students may resubmit incorrect work as soon as they have received feedback, and the incentive to do so is the possibility of improving their overall mark, which appears to be a particularly strong motivator.

Context

This pattern works best for skills-based learning situations in which multiple misconceptions are possible. It suits assessment types in which student answers typically contain a number of errors, and for which detailed feedback indicating the source and type of each error can be given without revealing exactly what must be done to correct it.
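As one illustration of feedback that locates errors without giving the answer away, the sketch below compares a student’s answer with a single model answer word by word, using the `SequenceMatcher` class from Python’s standard `difflib` module. It assumes exactly one acceptable answer, whitespace tokenisation and case-insensitive matching; the function name `locate_errors` and the message wording are hypothetical, and this is not claimed to be the comparison method used in the original exercises.

```python
from difflib import SequenceMatcher


def locate_errors(student: str, model: str) -> list[str]:
    """Describe where each error is and what kind it is, without giving the fix.

    Hypothetical helper: compares the whitespace-separated words of a student
    answer with a single model answer, ignoring case.
    """
    given = student.lower().split()
    target = model.lower().split()
    matcher = SequenceMatcher(a=given, b=target, autojunk=False)
    messages = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "replace":
            # Flag each faulty word, but never show its replacement.
            for pos in range(i1, i2):
                messages.append(f"word {pos + 1} ('{given[pos]}') is incorrect")
        elif tag == "delete":
            # Superfluous words are flagged by position.
            for pos in range(i1, i2):
                messages.append(f"word {pos + 1} ('{given[pos]}') is not needed")
        elif tag == "insert":
            # Say how many words are missing and where, not which words.
            where = f"before word {i1 + 1}" if i1 < len(given) else "at the end"
            messages.append(f"{j2 - j1} word(s) missing {where}")
    return messages
```

For "I has went home" checked against "I have gone home", this reports that words 2 and 3 are incorrect without saying what they should be, which leaves the repair to the student in the ‘refine’ step.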

The pattern was originally developed for online language-learning exercises and is particularly applicable to this domain, but it might also work for other skills-based fields such as mathematics, computer programming and the natural sciences. It works best if feedback can be provided fairly quickly, while the student’s mind is still on his or her answer and the thought processes that led to it. Since an incorrect answer is marked twice, the pattern is best applied where marking can be automated or handled in some other way that does not put additional pressure on staff (for instance, where peer marking is possible).

Solution

Whether this pattern is used with large coding assignments in Computer Science or short translation questions in language learning (or any other form of assessment to which it is suited), student submissions should be marked and feedback returned as quickly as is practicable.

Try: The ‘try’ step is the student’s initial attempt. If the submitted answer is entirely correct, a mark of 100% is awarded and the process ends. If the submission contains errors, an interim mark reflecting its accuracy is given, along with detailed feedback on the location and type of each error. This interim mark contributes a fixed percentage of the total score for the question or assignment.

Refine: If the ‘try’ submission was not completely correct, the ‘refine’ step begins. Students get a second attempt, in which they try to refine their previous submission. After this attempt, feedback on any remaining (or newly introduced) errors is given, along with the correct answer or answers. The mark for this attempt contributes the remaining percentage of the overall score for the question or assignment.

The marks for the ‘try’ and ‘refine’ stages are weighted unequally, with more importance given to the first (‘try’) attempt. The exact ratio can vary, but a distinct bias towards the first submission works best, since it ensures that students consider every component of their first answer carefully and then give equally careful consideration to improving it in the light of the feedback. If the ratio is skewed too far towards the second (‘refine’) attempt, students tend to take less care over their initial answer; if it is skewed too far towards the first, they are less inclined to repair the trickier errors. The ratio should also be adjusted according to how much information the feedback carries: the more informative the feedback, the smaller the share of the mark the second attempt should contribute.
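The weighting can be written as a simple weighted sum. Here $m_1$ and $m_2$ are the percentage marks for the ‘try’ and ‘refine’ attempts and $w$ is the share of the score given to the first attempt; these symbols and the illustrative value $w = 0.7$ are assumptions, since the pattern fixes no particular ratio beyond favouring the first attempt ($w > 1/2$). A fully correct first attempt ends the process with $S = 100$.

```latex
\begin{aligned}
S &= w\,m_1 + (1 - w)\,m_2, \qquad \tfrac{1}{2} < w < 1 \\
\text{careful first attempt } (w = 0.7,\ m_1 = 60,\ m_2 = 90):\quad S &= 0.7(60) + 0.3(90) = 42 + 27 = 69 \\
\text{careless first attempt } (w = 0.7,\ m_1 = 20,\ m_2 = 100):\quad S &= 0.7(20) + 0.3(100) = 14 + 30 = 44
\end{aligned}
```

Under this weighting even a perfect refinement cannot make up for a careless first attempt, which is the behaviour the pattern relies on. By the same reasoning, richer feedback (which makes the second attempt easier) would call for a larger $w$.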

No attempts beyond the second are permitted. This limit, together with the unequal weighting of the marks for the two attempts, proved to be very important. Allowing students to resubmit faulty work introduced a new problem: when multiple resubmissions were permitted, some candidates adopted a mindless iterative approach to the work, starting with a ‘stab in the dark’ and then letting themselves be guided step by step to the correct answer, often through numerous minimally altered attempts.
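Taken together, the two steps and the attempt limit behave like a small per-question state machine: a fully correct ‘try’ closes the question, an imperfect one opens exactly one ‘refine’, and the model answer is released only once the question is closed. The sketch below assumes caller-supplied `mark` and `feedback` functions; the class name `TryRefineQuestion`, the toy helpers `word_accuracy` and `wrong_positions`, and the 70/30 weighting are all hypothetical illustrations rather than part of the original system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TryRefineQuestion:
    """Hypothetical per-question state for the Try Once, Refine Once flow."""
    model_answer: str
    mark: Callable[[str, str], float]          # (answer, model) -> 0..100
    feedback: Callable[[str, str], List[str]]  # locates errors, hides fixes
    try_weight: float = 0.7                    # illustrative ratio only
    marks: List[float] = field(default_factory=list)
    closed: bool = False

    def submit(self, answer: str) -> dict:
        if self.closed:
            # Hard limit of two attempts: blocks trial-and-error "groping".
            raise RuntimeError("no further attempts are permitted")
        score = self.mark(answer, self.model_answer)
        self.marks.append(score)
        # A perfect 'try' or any 'refine' ends the process.
        self.closed = score >= 100 or len(self.marks) == 2
        result = {"mark": score,
                  "feedback": self.feedback(answer, self.model_answer)}
        if self.closed:
            # The model answer is released only after refining is over.
            result["model_answer"] = self.model_answer
            result["total"] = self.total()
        return result

    def total(self) -> float:
        """Weighted overall score, available once the question is closed."""
        if not self.closed:
            raise RuntimeError("question is still open")
        if len(self.marks) == 1:  # fully correct first attempt
            return 100.0
        first, second = self.marks
        return self.try_weight * first + (1 - self.try_weight) * second


# Toy marking and feedback functions for illustration (positional word match).
def word_accuracy(answer: str, model: str) -> float:
    a, m = answer.lower().split(), model.lower().split()
    hits = sum(x == y for x, y in zip(a, m))
    return 100 * hits / max(len(a), len(m), 1)


def wrong_positions(answer: str, model: str) -> List[str]:
    a, m = answer.lower().split(), model.lower().split()
    return [f"word {i + 1} is incorrect"
            for i, (x, y) in enumerate(zip(a, m)) if x != y]
```

With `q = TryRefineQuestion("I have gone home", word_accuracy, wrong_positions)`, submitting "I has went home" returns a mark of 50 with words 2 and 3 flagged and no model answer; a corrected second submission closes the question, releases the model answer and gives a total of 65 under the illustrative weighting, and any third call raises `RuntimeError`.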

Support

Source

The source for the Try Once, Refine Once pattern is the design narrative ‘String comparison in language learning’.[2]

Supporting case

Post-16 string comparison[3]

Theoretical justification

One of the strengths of this pattern is that students are not given access to model answers until they have had the chance to consider the problems with their original submissions properly. In the context of language learning (the domain from which this pattern arose), Ferreira and Atkinson[4] divide feedback strategies into Giving-Answer Strategies (GAS), in which the target forms corresponding to students’ errors are provided, and Prompting-Answer Strategies (PAS), in which students are ‘pushed’ to notice and ‘repair’ errors for themselves. Using different experimental settings, they found that in a tutorial context PAS seemed to promote more constructive learning.

Ferreira and Atkinson’s work complements the findings of Nicol and Macfarlane-Dick[5], who maintain that good feedback practice includes, among other things, activities which ‘facilitate the development of self-assessment (reflection) in learning’ and which provide opportunities to ‘close the gap between current and desired performance’. The latter category includes activities that offer the chance to repeat a particular ‘task-performance-feedback cycle’, and allowing resubmission of work is an obvious way of doing this. Sadler[6] also stressed the importance of giving students chances to act on feedback, because without them it is impossible to tell whether the feedback results in learning. Boud[7] backed this up, pointing out that teachers may not be able to gauge the effectiveness of their feedback if their students cannot use it to produce improved work.

This pattern addresses the issue of acting on feedback but restricts students to a single resubmission. As mentioned previously, allowing multiple attempts at answers was not found to be beneficial: it led to feedback being delivered iteratively, which hindered effective learning because students could ‘grope their way’ to a correct solution without having to think about each answer as a whole.

In the field of Computer Science, Malmi and Korhonen[8], researching automatic feedback and resubmission, found that allowing high or unlimited numbers of resubmissions discouraged what they called ‘active pondering’. In other words, when allowed numerous resubmissions, learners did not concentrate on finding their own errors; instead they used the automatic assessment system as a sort of debugger, trying a solution to see if it worked and, if not, trying something else. A follow-up paper[9] noted that when multiple submissions were permitted, about 10% of students spent an unreasonable amount of time on exercises, that is, time that was disproportionate when measured against their examination performance.

Hattie and Timperley[10] argue that ‘The degree of confidence that students have in the correctness of responses can affect receptivity to and seeking of feedback’. They cite the work of Kulhavy and Stock[11], who noted that when confidence (response certainty) is high and the response turns out to be correct, little attention is paid to the feedback, and that feedback has its greatest effect when a learner expects a response to be correct and it turns out to be wrong. As Kulhavy and Stock put it, ‘high confidence errors are the point at which feedback should play its greatest corrective role, simply because the person studies the item longer in an attempt to correct the misconception’ (p. 225). With this pattern, the greater proportion of the marks is given for the first attempt, so students are likely to submit answers in which they have a considerable degree of confidence. If such an answer then turns out to be incorrect, this is exactly the situation in which feedback is likely to be most effective.

References

- [1] Fowler, A. (2014). Pattern: Try Once, Refine Once. In Y. Mor, H. Mellar, S. Warburton, & N. Winters (Eds.), Practical design patterns for teaching and learning with technology (pp. 323–327). Rotterdam, The Netherlands: Sense Publishers.

- [2] Fowler, A. (2014). Design Narrative: String Comparison in Language Learning. In Y. Mor, H. Mellar, S. Warburton, & N. Winters (Eds.), Practical design patterns for teaching and learning with technology (pp. 279–284). Rotterdam, The Netherlands: Sense Publishers.

- [3] Fowler, A., Daly, C., & Mor, Y. (2008). Post-16 string comparison. Retrieved October 26, 2011, from http://web.lkldev.ioe.ac.uk/patternlanguage/xwiki/bin/view/Cases/Post16stringcomparison.

- [4] Ferreira, A., & Atkinson, J. (2009). Designing a feedback component of an intelligent tutoring system for foreign language. Knowledge-Based Systems, 22(7), 496–501.

- [5] Nicol, D. J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

- [6] Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instructional Science, 18(2), 119–144.

- [7] Boud, D. (2000). Sustainable assessment: Rethinking assessment for the learning society. Studies in Continuing Education, 22(2), 151–167.

- [8] Malmi, L., & Korhonen, A. (2004). Automatic feedback and resubmissions as learning aid. In Kinshuk, C. Looi, E. Sutinen, D. G. Sampson, I. Aedo, L. Uden, & E. Kähkönen (Eds.), Proceedings of the IEEE International Conference on Advanced Learning Technologies (ICALT 2004), Joensuu, Finland. IEEE.

- [9] Malmi, L., Karavirta, V., Korhonen, A., & Nikander, J. (2005). Experiences on automatically assessed algorithm simulation exercises with different resubmission policies. ACM Journal of Educational Resources in Computing, 5(3), Article 7.

- [10] Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

- [11] Kulhavy, R. W., & Stock, W. A. (1989). Feedback in written instruction: The place of response certitude. Educational Psychology Review, 1(4), 279–308.